Microservices are great and all that, but you know those old-fashioned batch services, like a data processing service or a cache loader service that should run at regular intervals? They're still around. These kinds of services often end up on one machine where they keep running their batch jobs until someone notices they've stopped working. Maybe a machine where it runs for both stage and production purposes, or maybe it doesn't even run in stage because no one can be bothered. Easier to just copy the database from production.

But we can do better, right? One way to solve this is to deploy the services to multiple machines, as you would with a web application. Use Octopus, deploy the package, install and start the service, then promote the same package to production, doing config transforms along the way. The problem then is that we have a service that runs on multiple machines, doing the same job multiple times. Unnecessary and, if there's a third-party API involved, probably unwanted.

Leader election to the rescue

Leader election is really quite a simple concept. The service nodes register against a host using a specific common key. One of the nodes is elected leader and performs the job, while the other ones are idle. This lock to a specific node is held as long as the node's session remains in the host's store. When the node's session is gone, the leadership is up for grabs by the next node that checks for it. Every time the nodes are scheduled to run their task, this check is performed.

Using this approach, we have one node doing the job while the others are standing by. At the same time, we get rid of our single point of failure. If a node goes down, another will take over. And we can incorporate this in our ordinary build chain and treat these services like any other type of application. Big win!
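To make the mechanism concrete, here is a minimal in-memory sketch of the idea: a store that hands a key to one node at a time and lets the next node take over once the holder's session has expired or been released. LockStore, TryAcquire and Release are made-up names for this illustration only. They are not part of Consul or any client library, and a real host does a lot more than this.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for the lock host. Not Consul's API.
class LockStore
{
    private readonly Dictionary<string, (string Node, DateTime Expires)> _locks =
        new Dictionary<string, (string Node, DateTime Expires)>();
    private readonly TimeSpan _ttl;

    public LockStore(TimeSpan ttl) { _ttl = ttl; }

    // Returns true if nodeId is the leader for the key after this call.
    public bool TryAcquire(string key, string nodeId, DateTime now)
    {
        if (_locks.TryGetValue(key, out var held)
            && held.Expires > now
            && held.Node != nodeId)
        {
            return false; // another node's session is still alive
        }

        _locks[key] = (nodeId, now + _ttl); // take over, or renew our own session
        return true;
    }

    // Gives up leadership so the next node does not have to wait for the TTL.
    public void Release(string key, string nodeId)
    {
        if (_locks.TryGetValue(key, out var held) && held.Node == nodeId)
            _locks.Remove(key);
    }
}

static class LeaderElectionDemo
{
    public static void Run()
    {
        var store = new LockStore(TimeSpan.FromSeconds(10));
        var t0 = new DateTime(2020, 1, 1, 0, 0, 0, DateTimeKind.Utc);

        Console.WriteLine(store.TryAcquire("service/clock", "node-a", t0));                // True
        Console.WriteLine(store.TryAcquire("service/clock", "node-b", t0.AddSeconds(3)));  // False
        Console.WriteLine(store.TryAcquire("service/clock", "node-b", t0.AddSeconds(11))); // True
    }
}
```

The third call succeeds because node-a never renewed its session within the 10 second TTL, which is the "if a node goes down, another will take over" case.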

An example with Consul.io

Consul is a tool for handling services in your infrastructure. It's good at doing many things and you can read all about it at consul.io. Consul is installed as an agent on your servers, which syncs with one or many hosts. But you can run it locally to try it out.

Running Consul locally

To play around with Consul, just download it from consul.io, unpack it and create a new config file in the extracted folder. Name the file local_config.json and paste in the config below.

    {
      "log_level": "TRACE",
      "bind_addr": "127.0.0.1",
      "server": true,
      "bootstrap": true,
      "acl_datacenter": "dc1",
      "acl_master_token": "yep",
      "acl_default_policy": "allow",
      "leave_on_terminate": true
    }

This will allow you to run Consul and see the logs of calls coming in. Run it by opening a command prompt, moving to the extracted folder and typing:

    consul.exe agent -dev -config-file local_config.json
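A quick way to confirm that the local agent is answering is to call its HTTP API. The sketch below asks the status endpoint for the current cluster (Raft) leader, which is a different thing from the service leader we elect later. It assumes the agent listens on Consul's default HTTP address, http://127.0.0.1:8500. ConsulProbe and its method names are made up for this example.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

static class ConsulProbe
{
    // Assumption: the agent uses Consul's default HTTP address.
    public const string DefaultAddress = "http://127.0.0.1:8500";

    // Builds the URL of the status endpoint that reports the cluster leader.
    public static Uri StatusLeaderUri(string baseAddress = DefaultAddress)
    {
        return new Uri(new Uri(baseAddress), "/v1/status/leader");
    }

    // Returns the raw response body, a JSON string holding the leader's address.
    public static async Task<string> GetLeaderAsync(
        HttpClient http, string baseAddress = DefaultAddress)
    {
        var response = await http.GetAsync(StatusLeaderUri(baseAddress));
        response.EnsureSuccessStatusCode(); // throws if the agent returned an error status
        return await response.Content.ReadAsStringAsync();
    }
}
```

Calling `await ConsulProbe.GetLeaderAsync(new HttpClient())` while the agent is running should also show up as an incoming call in the TRACE log.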

Consul.net client

For a .NET solution, a nice client is available as a NuGet package, https://github.com/PlayFab/consuldotnet. With that, we just create a ConsulClient and have access to all the APIs provided by Consul. For leader election, we need the different lock methods in the client. Basically, CreateLock creates the node session in Consul, Acquire tries to assume leadership if no leader exists, and the session property IsHeld is true if the node is elected leader and should do the job.

    var consulClient = new ConsulClient();
    var session = consulClient.CreateLock(serviceKey);
    await session.Acquire(CancellationToken.None);
    if (session.IsHeld)
        DoWork();

A demo service

Here's a small service running a timer that fires every 3 seconds. On construction, the service instance creates a session in Consul. Every time the CallTime function is triggered, we check if we hold the lock. If we do, we display the time, otherwise we print "Not the leader". When the service is stopped, we destroy the session so the other nodes won't have to wait for the session TTL to end.

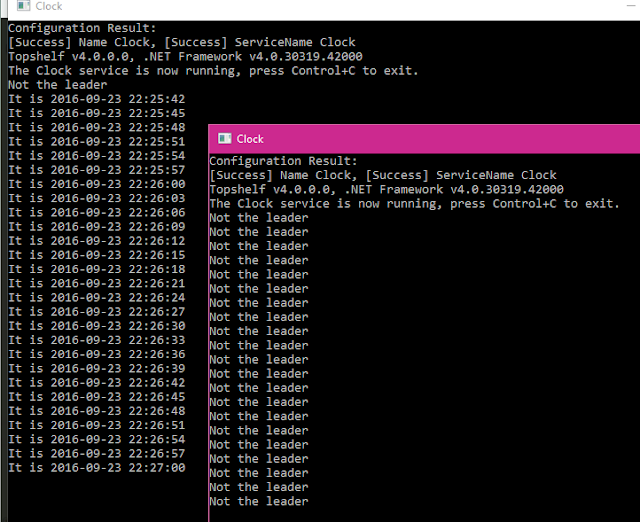

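    using System;
    using System.Threading;
    using System.Threading.Tasks;
    using Consul;
    using Topshelf;
    using Timer = System.Timers.Timer;

    namespace ClockService
    {
        class Program
        {
            static void Main(string[] args)
            {
                // Topshelf hosts the Clock class as a Windows service.
                HostFactory.Run(x =>
                {
                    x.Service<Clock>(s =>
                    {
                        s.ConstructUsing(name => new Clock());
                        s.WhenStarted(c => c.Start());
                        s.WhenStopped(c => c.Stop());
                    });
                    x.RunAsLocalSystem();
                    x.SetDisplayName("Clock");
                    x.SetServiceName("Clock");
                });
            }
        }

        class Clock
        {
            private readonly Timer _timer;
            private readonly IDistributedLock _session;

            // The acquire attempt currently in flight, if any. It is never
            // awaited on the timer thread, so a tick does not block on it.
            private Task _acquiring = Task.CompletedTask;

            public Clock()
            {
                var consulClient = new ConsulClient();
                // Every instance uses the same key, so only one can hold the lock.
                _session = consulClient.CreateLock("service/clock");
                _timer = new Timer(3000);
                _timer.Elapsed += (sender, eventArgs) => CallTime();
            }

            private void CallTime()
            {
                // Start a new acquire attempt in the background unless we already
                // lead or an earlier attempt is still pending.
                if (!_session.IsHeld && _acquiring.IsCompleted)
                    _acquiring = Task.Run(() => _session.Acquire(CancellationToken.None));

                Console.WriteLine(_session.IsHeld
                    ? $"It is {DateTime.Now}"
                    : "Not the leader");
            }

            public void Start() { _timer.Start(); }

            public void Stop()
            {
                _timer.Stop();
                if (!_session.IsHeld) return;

                // Release first, then remove the lock entry, so a standby node
                // can take over without waiting for the session TTL.
                _session.Release().GetAwaiter().GetResult();
                try
                {
                    _session.Destroy().GetAwaiter().GetResult();
                }
                catch (LockInUseException)
                {
                    // Another node has already taken the lock; nothing to clean up.
                }
            }
        }
    }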

When two instances of this service are started, we get this result. One node is active and the other one is idle.

When the previous leader is stopped, the second node automatically takes over the leadership and starts working.

All in all, quite a nice solution for securing the running of those necessary batch services. :)
